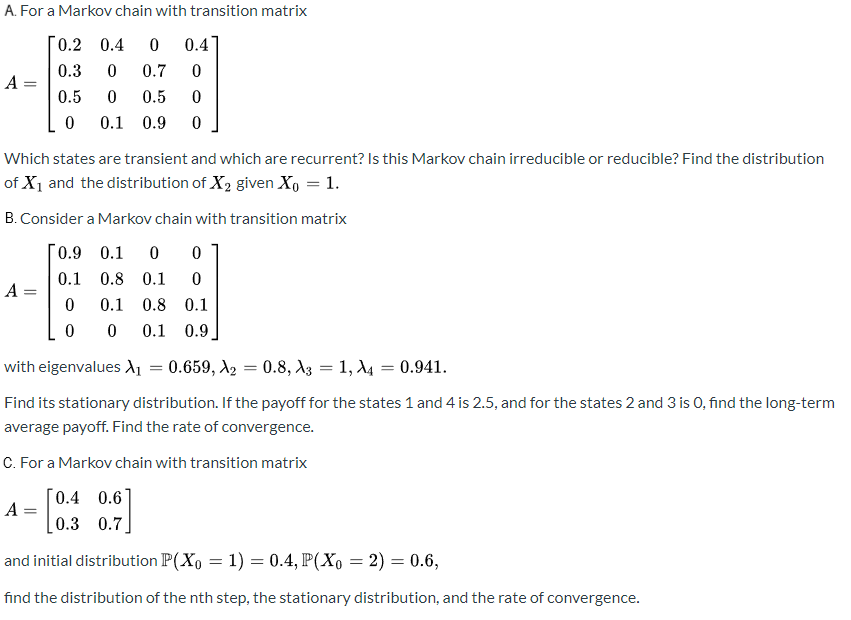

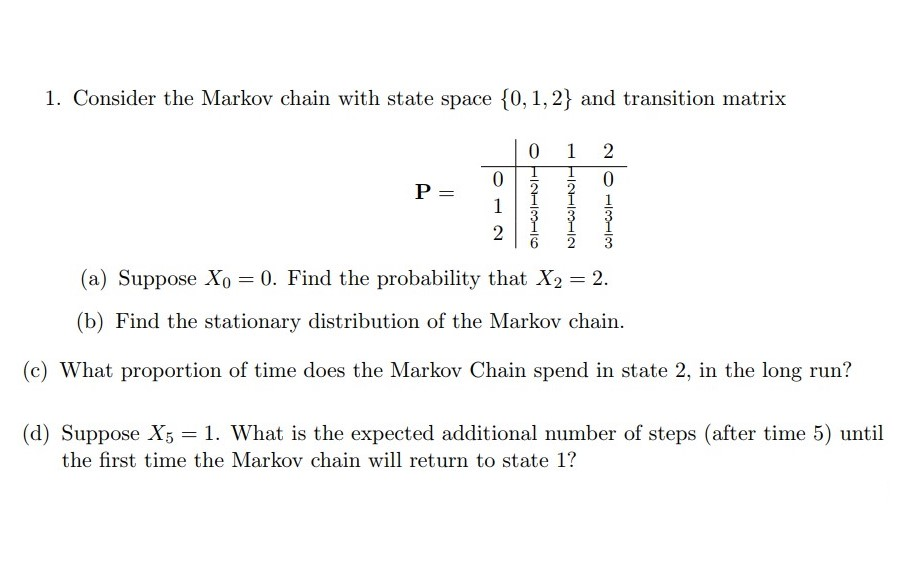

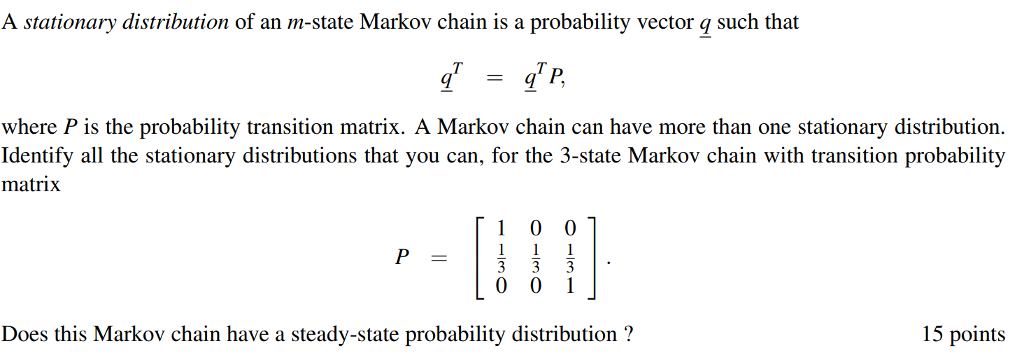

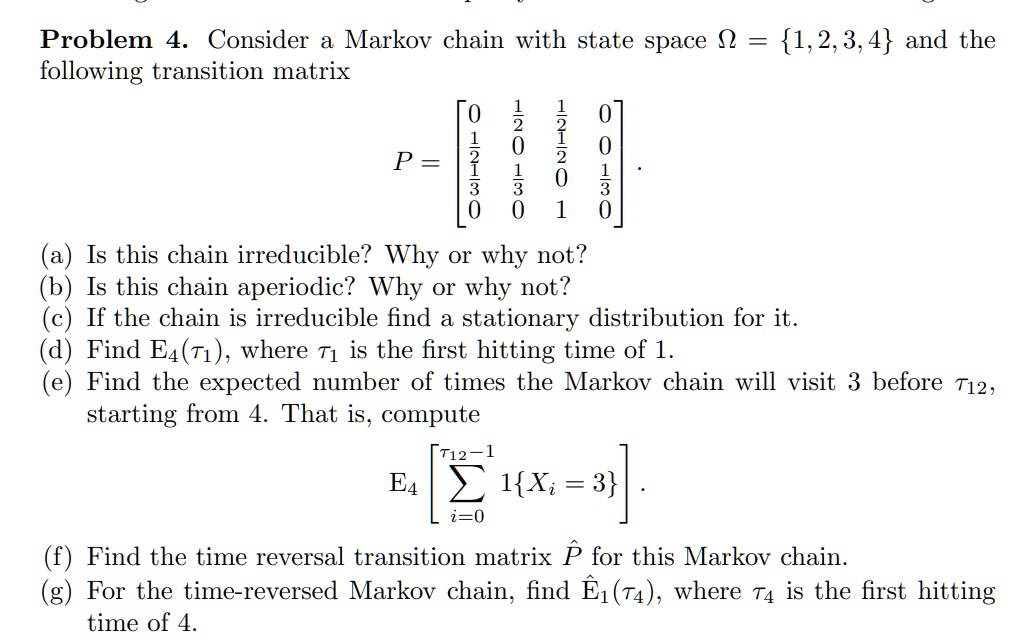

SOLVED: Problem 4. Consider a Markov chain with state space {1, 2, 3, 4} and the following transition matrix P. Is this chain irreducible? Why?
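For a finite chain, irreducibility means every state can reach every other state through transitions with positive probability. That makes it a reachability question on the transition graph, which a breadth-first search answers. The sketch below shows the check. The function names and both example matrices are hypothetical illustrations; the problem's own matrix is not recoverable from the text.

```python
from collections import deque
from fractions import Fraction

def reachable(P, start):
    """Return the set of states reachable from `start` (including itself)
    by following transitions with positive probability."""
    n = len(P)
    seen = {start}
    queue = deque([start])
    while queue:
        i = queue.popleft()
        for j in range(n):
            if P[i][j] > 0 and j not in seen:
                seen.add(j)
                queue.append(j)
    return seen

def is_irreducible(P):
    """A finite chain is irreducible iff every state reaches every state."""
    n = len(P)
    return all(len(reachable(P, i)) == n for i in range(n))

# Hypothetical 4-state matrices; states 1..4 are indexed 0..3 here.
P_cycle = [
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
    [1, 0, 0, 0],
]
P_absorbing = [
    [Fraction(1, 2), Fraction(1, 2), 0, 0],
    [0, 1, 0, 0],  # state 2 is absorbing: nothing else is reachable from it
    [0, 0, Fraction(1, 2), Fraction(1, 2)],
    [Fraction(1, 4), 0, Fraction(1, 4), Fraction(1, 2)],
]

print(is_irreducible(P_cycle))      # True
print(is_irreducible(P_absorbing))  # False
```

An absorbing state, or any closed class smaller than the whole state space, is enough to make the chain reducible.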

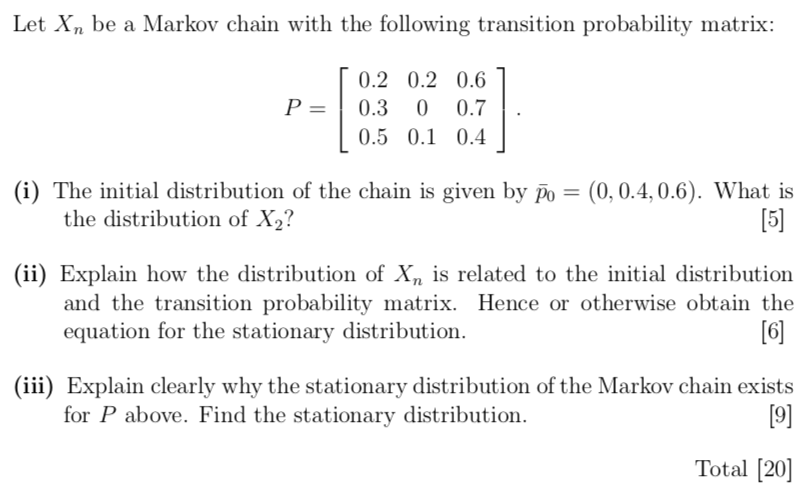

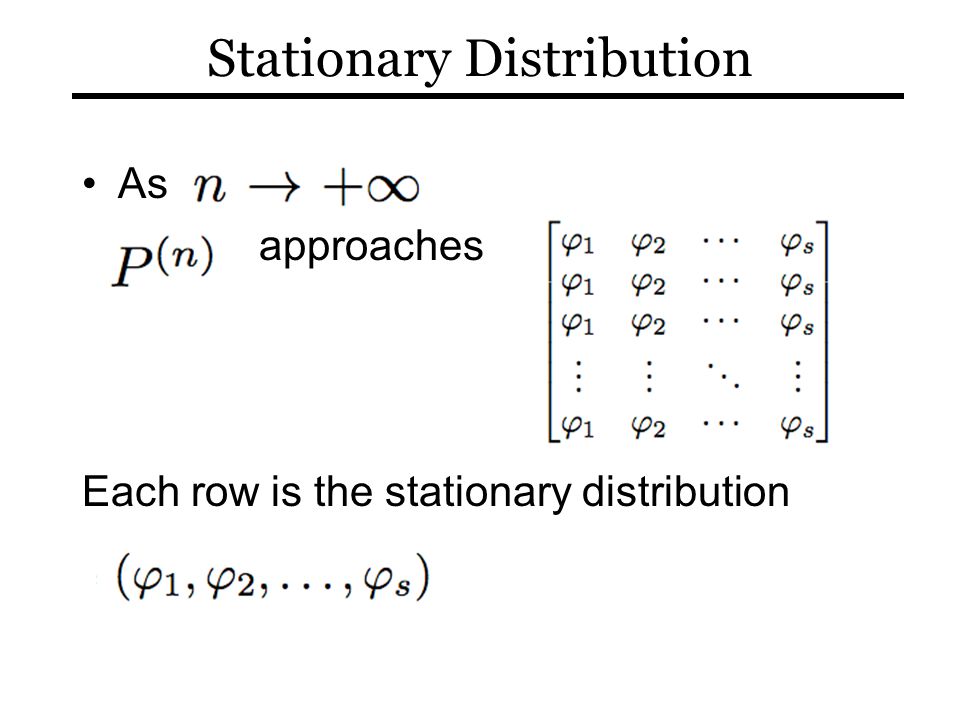

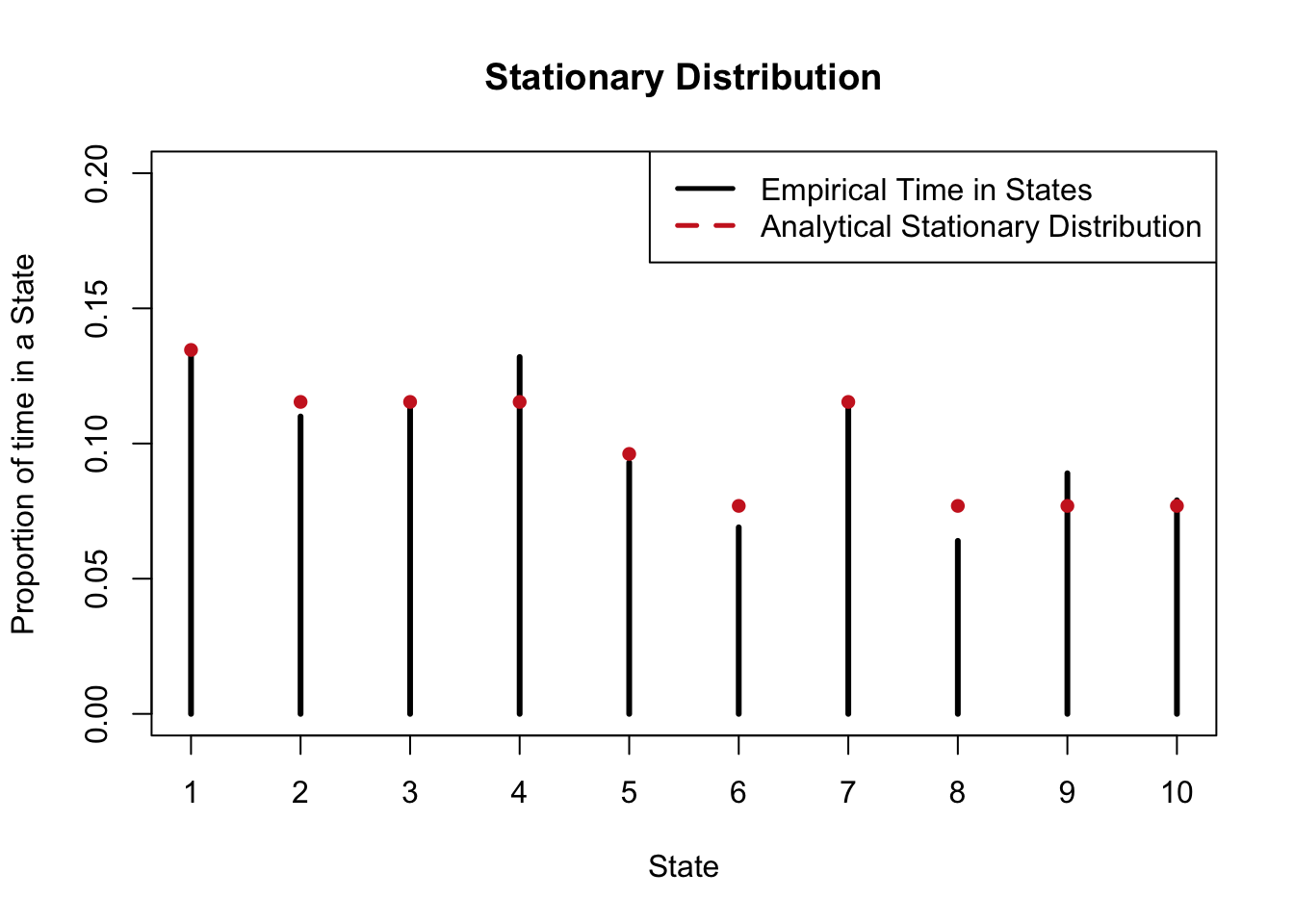

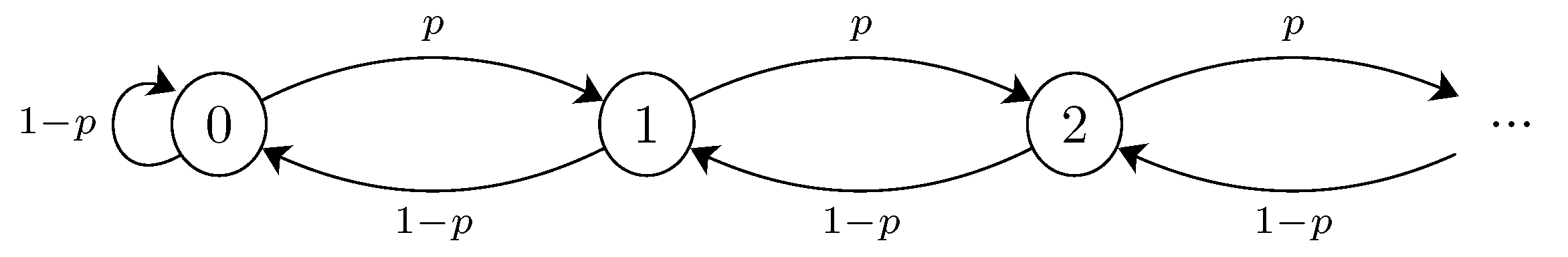

probability theory - Find stationary distribution for a continuous time Markov chain - Mathematics Stack Exchange
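For an irreducible continuous-time chain on finitely many states, the stationary distribution π solves the balance equations πQ = 0 together with Σᵢ πᵢ = 1. Here Q is the generator matrix: nonnegative off-diagonal rates, with each row summing to 0. One balance equation is redundant, so the minimal sketch below replaces it with the normalisation condition and solves the system exactly with rational arithmetic. The function name and the 3-state generator are hypothetical examples, not taken from the linked question.

```python
from fractions import Fraction

def stationary_ctmc(Q):
    """Solve pi Q = 0, sum(pi) = 1 for an irreducible finite CTMC.

    Assumes Q is a valid generator (rows sum to 0) of an irreducible chain,
    so the system below has a unique solution."""
    n = len(Q)
    # Row j of A encodes the balance equation sum_i pi_i * Q[i][j] = 0.
    A = [[Fraction(Q[i][j]) for i in range(n)] for j in range(n)]
    b = [Fraction(0)] * n
    # Replace the (redundant) last balance equation by sum(pi) = 1.
    A[-1] = [Fraction(1)] * n
    b[-1] = Fraction(1)
    # Gauss-Jordan elimination in exact rational arithmetic.
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
                b[r] -= factor * b[col]
    return [b[i] / A[i][i] for i in range(n)]

# Hypothetical generator: off-diagonal entries are jump rates.
Q = [
    [-3, 2, 1],
    [1, -2, 1],
    [2, 2, -4],
]
print(stationary_ctmc(Q))  # [Fraction(3, 10), Fraction(1, 2), Fraction(1, 5)]
```

You can check the result by hand. The first balance equation, -3π₁ + π₂ + 2π₃ = 0, holds for (3/10, 1/2, 1/5), and so do the other two.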

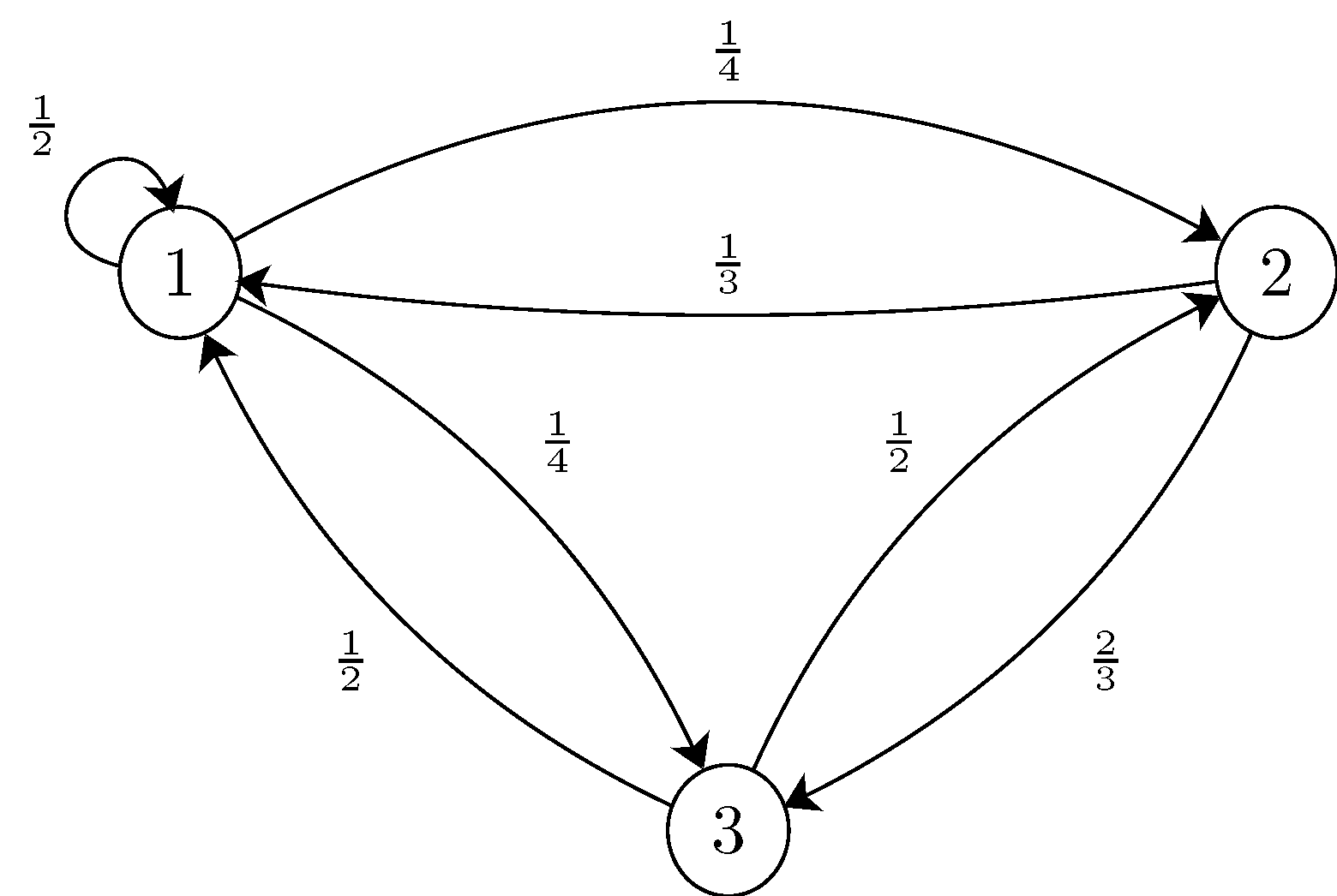

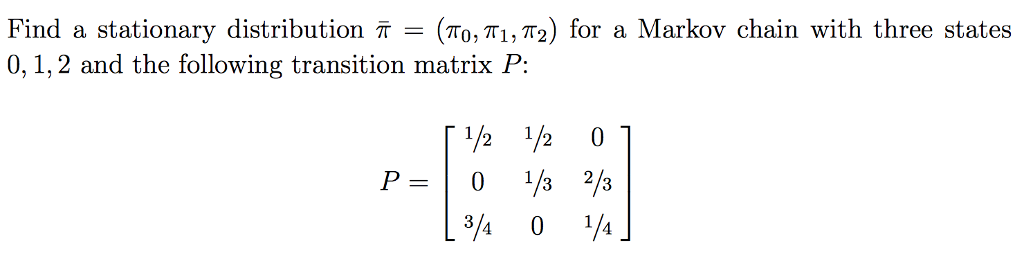

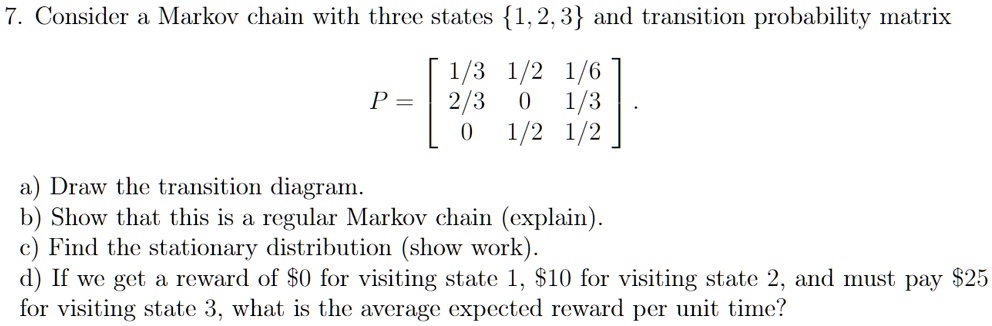

SOLVED: Consider a Markov chain with three states 1, 2, 3 and transition probability matrix P, whose nonzero entries, row by row, read 1/3, 1/2, 1/6; 2/3, 1/3; 1/2, 1/2. (a) Draw the transition diagram. (b) Show that this is a…
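A transition diagram has one node per state and one arc i → j, labelled p_ij, for every positive entry of P. The minimal sketch below lists those arcs. It also checks that each row of P is a probability distribution; exact fractions are used so the row-sum test is not affected by floating-point rounding. The function name and the matrix are hypothetical, chosen for illustration, since the positions of the zero entries in the problem's matrix are not recoverable.

```python
from fractions import Fraction as F

def transition_arcs(P, labels=None):
    """Return the arcs (i, j, p_ij) of the transition diagram: one arc for
    every ordered pair of states with positive transition probability."""
    n = len(P)
    labels = labels or list(range(1, n + 1))
    # Every row of a transition matrix must be a probability distribution.
    for i, row in enumerate(P):
        if sum(row) != 1 or any(p < 0 for p in row):
            raise ValueError(f"row {labels[i]} is not a probability distribution")
    return [(labels[i], labels[j], P[i][j])
            for i in range(n) for j in range(n) if P[i][j] > 0]

# Hypothetical 3-state matrix, for illustration only.
P = [
    [F(1, 2), F(1, 2), 0],
    [0, F(1, 3), F(2, 3)],
    [F(1, 4), 0, F(3, 4)],
]
for i, j, p in transition_arcs(P):
    print(f"{i} -> {j}  [{p}]")
# 1 -> 1  [1/2]
# 1 -> 2  [1/2]
# 2 -> 2  [1/3]
# 2 -> 3  [2/3]
# 3 -> 1  [1/4]
# 3 -> 3  [3/4]
```

Self-loops such as 1 -> 1 appear whenever a diagonal entry is positive, so they belong in the drawn diagram too.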